Photo credit: Google

Quantum computing is finally starting to come into its own. “Classical” computing uses “bits”, where information is coded as either 1s or 0s. Quantum computing instead utilises quantum theory, in which, at the very smallest scales, things can be either 1 or 0, or a combination of 1 and 0 at the same time.

Naturally, such concepts are hard to wrap one’s head around. Richard Feynman, the theoretical physicist, is often credited with saying, “If you think you understand quantum mechanics, you don’t understand quantum mechanics”. Needless to say, if this is coming from the godfather of quantum computing, then it isn’t easy to understand…

Google recently announced its “Bristlecone” quantum chip with 72 quantum bits, or “qubits”. It has been argued that “generalist” quantum computers with chips above 50 qubits may be able to outperform classical computers at some specific tasks. The jury is still out on whether this chip, or IBM’s 50-qubit chip, can do this.

The pioneering D-Wave, the world’s first quantum computing company, has a chip with 2,000 qubits; however, its chips are built with the sole goal of finding global maxima and minima, and are not generalist chips.

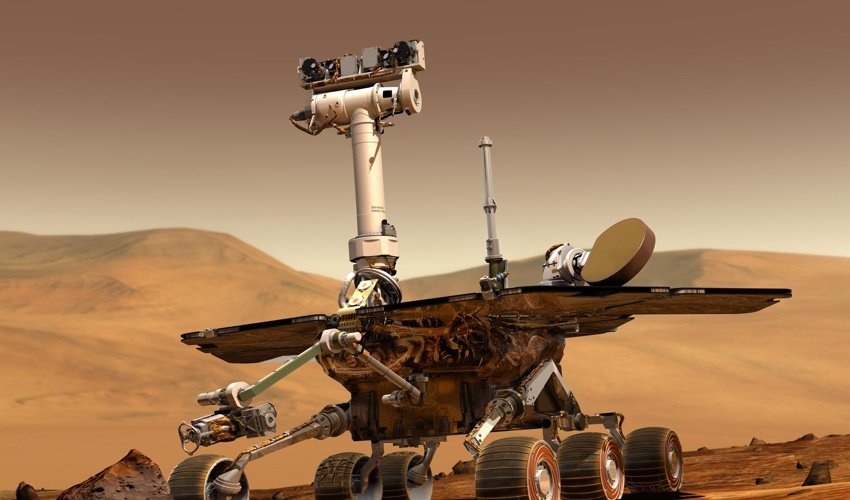

Photo credit: Science Alert

Quantum Primer

Here are some key building blocks of (a basic) understanding of what quantum computing is:

– Superposition: when something can exist in two states at once, so a qubit can hold a combination of 1 and 0 rather than just one of them. With this property, the number of states a chip can represent doubles with each qubit added, so computing power can, in principle, scale exponentially.

– (Quantum) Entanglement: referred to as “spooky action at a distance” by Einstein, where two particles (or groups of particles) are linked such that by simply observing the state of one we know the state of the other. I’ll leave this definition here as it is a little “other-worldly”… For additional info, you can start here.

– Coherence and “noise”: given the fickle nature of things behaving as two things at the same time, the slightest influence on the qubits can introduce “noise” and cause “decoherence”, leading to errors in their calculations. As a result, the chips are generally cooled to a temperature colder than deep space, with as much other interference as possible removed. As the number of qubits scales, the risk of decoherence increases; hence there is a quality vs. quantity issue, so bear that in mind when you read the next headlines!

– Intractable problem: a problem for which there is no “efficient” algorithm to solve it. Some of these problems can’t, in practice, be solved using “classical” computers, but quantum computers could theoretically solve them. But how do you know if a problem has been solved by a quantum computer, if you can’t check the results using a “classical” computer?…
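To make the superposition and entanglement points a little more concrete, here is a rough sketch in plain Python. It is not how a real quantum chip is programmed; it only simulates the bookkeeping on a classical machine. The function names (`num_amplitudes`, `uniform_superposition`, `measurement_probabilities`) are made up for this illustration. The sketch shows two things: describing n qubits classically takes 2^n numbers, which is why 50 or 72 qubits quickly become unwieldy, and in a standard entangled pair (a “Bell state”) the only possible measurement results are 00 and 11, so observing one qubit tells you the other.

```python
import math


def num_amplitudes(n_qubits):
    """Number of complex amplitudes needed to describe n qubits classically."""
    return 2 ** n_qubits


def uniform_superposition(n_qubits):
    """State in which every n-bit string is equally likely to be measured."""
    size = num_amplitudes(n_qubits)
    amplitude = 1 / math.sqrt(size)
    return [complex(amplitude, 0.0)] * size


def measurement_probabilities(state):
    """Born rule: the probability of each outcome is |amplitude| squared."""
    return [abs(a) ** 2 for a in state]


# Bell state (|00> + |11>) / sqrt(2), a textbook example of entanglement.
# Amplitudes are listed in the order 00, 01, 10, 11.
bell_state = [complex(1 / math.sqrt(2)), 0j, 0j, complex(1 / math.sqrt(2))]

# Each added qubit doubles the size of the classical description.
for n in (1, 2, 10, 50, 72):
    print(f"{n:>2} qubits -> {num_amplitudes(n):,} amplitudes")

# Only 00 and 11 have non-zero probability: the two qubits always agree.
for label, p in zip(("00", "01", "10", "11"), measurement_probabilities(bell_state)):
    print(f"P({label}) = {p:.2f}")
```

Note that the simulation itself shows the catch: the list of amplitudes doubles with every qubit, so a classical computer runs out of memory long before it reaches the qubit counts quoted in the headlines.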

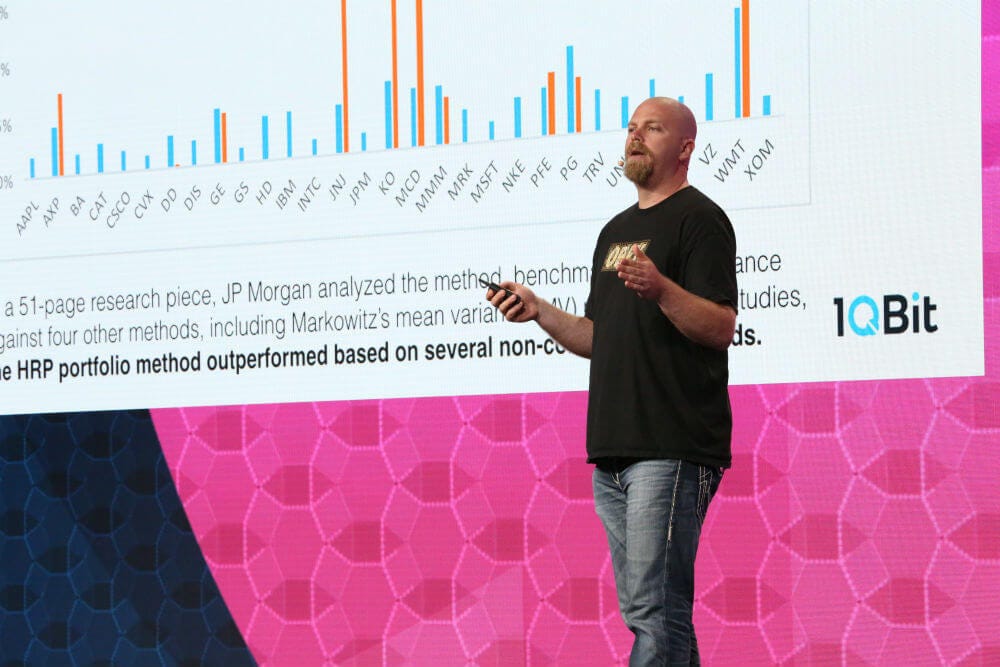

1QBit’s Andrew Fursman at Singularity University’s Finance Summit. Photo Credit: Singularity University

A Brave New World

This is an area of immense excitement, in which more and more companies and institutions are undertaking R&D and commercialisation. Our investee 1QBit is one such company that is forging ahead, working with companies to become “quantum ready”. It has been collaborating with Dow in materials, Biogen in biotech, and Fujitsu in high-performance computing, and is also supported by the likes of Accenture to help roll out its capabilities.

As with most emerging technologies these days, progress is moving at an exponential pace, and in quantum computing this is true almost by definition, since each added qubit doubles the number of states a chip can represent. This has massive implications for human development in the near term and beyond.